Dear Medical Educator,

The journey from earlier versions of GPT to GPT-4o focused largely on scaling up model size by increasing the number of parameters, which resulted in significant performance improvements. Recently, however, OpenAI has taken a different path, aiming to push beyond mere scale.

Why?

Quite simply, because they’ve nearly exhausted the available human-created text, and further parameter scaling yields diminishing returns. The focus now is on making models think more like humans, using methods that allow for nuanced reasoning, planning, and adaptability.

Readers of the earlier post on o1 will remember this PhD-level AI model and how it can be used in medical education.

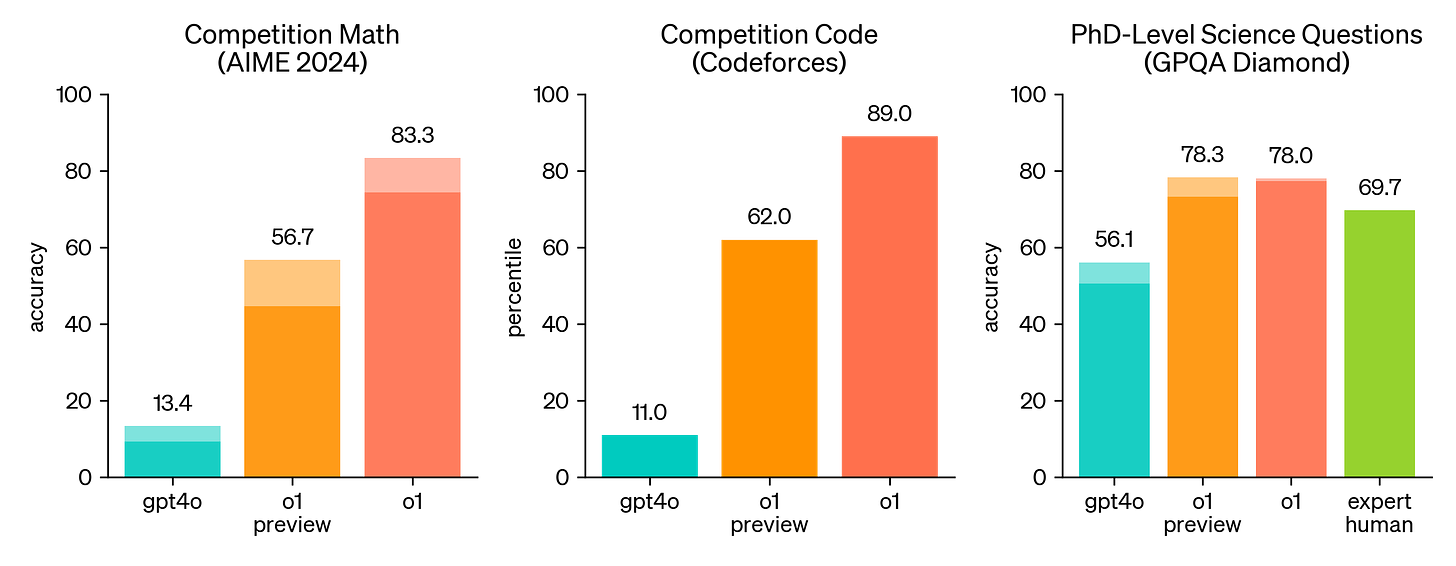

As you can see, o1 performs far better than GPT-4o on science-related tasks.

What Makes PhD-Level AI (o1) Different

While OpenAI hasn’t officially confirmed every technique, here are some that likely contribute to this huge performance improvement:

Chain of Thought (CoT): Rather than answering immediately, the model is guided to think step by step natively, emulating a human-like reasoning process.

Reflection: The model is likely capable of evaluating its own responses, identifying errors or areas for improvement before delivering the final output.

Monte Carlo Tree Search (MCTS): MCTS is a decision-making method that uses a tree structure to explore different solution paths. It simulates various options, branching out like a tree, and increasingly favors the paths that produced the best results in earlier simulations. This approach is especially effective for tasks requiring strategic thinking, such as games or planning. (Source: Wikipedia)

Reinforcement Learning (RL): The insights from the “thinking” process are combined with reinforcement signals. For example, if the model’s reasoning exposes a logical error and a human feedback signal confirms it, the model receives a stronger learning signal, reinforcing the need to avoid similar errors in the future.
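To make the MCTS description above more concrete, here is a minimal Python sketch of the classic four-step loop (selection, expansion, simulation, backpropagation), using the standard UCB1 rule to balance trying new branches against revisiting promising ones. The toy problem and the names `ACTIONS`, `TARGET`, `reward`, and `mcts` are hypothetical choices for illustration only; OpenAI has not disclosed whether or how o1 uses MCTS, so this shows the general technique, not o1’s method.

```python
import math
import random

# Hypothetical toy problem (illustration only): choose DEPTH moves from
# ACTIONS; the sequence TARGET is the best possible "solution path".
ACTIONS = (0, 1, 2)
DEPTH = 3
TARGET = (2, 0, 1)


def reward(path):
    """Score a complete path: fraction of positions that match TARGET."""
    return sum(a == b for a, b in zip(path, TARGET)) / DEPTH


class Node:
    def __init__(self, path, parent=None):
        self.path = path        # moves taken so far
        self.parent = parent
        self.children = {}      # action -> Node
        self.visits = 0
        self.value = 0.0        # sum of rewards from simulations through here

    def is_terminal(self):
        return len(self.path) == DEPTH

    def is_fully_expanded(self):
        return len(self.children) == len(ACTIONS)

    def ucb1(self, c=1.4):
        # Average reward (exploitation) plus a bonus for rarely tried nodes (exploration).
        mean = self.value / self.visits
        return mean + c * math.sqrt(math.log(self.parent.visits) / self.visits)


def mcts(iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes by highest UCB1.
        while not node.is_terminal() and node.is_fully_expanded():
            node = max(node.children.values(), key=Node.ucb1)
        # 2. Expansion: add one untried child branch.
        if not node.is_terminal():
            untried = [a for a in ACTIONS if a not in node.children]
            action = rng.choice(untried)
            child = Node(node.path + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Simulation: complete the path with random moves and score it.
        path = node.path
        while len(path) < DEPTH:
            path += (rng.choice(ACTIONS),)
        r = reward(path)
        # 4. Backpropagation: update visit counts and values up to the root.
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    # Read off the most-visited path as the chosen solution.
    best, node = [], root
    while node.children:
        action, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        best.append(action)
    return tuple(best)


print(mcts())
```

Running it should print `(2, 0, 1)`: the search recovers `TARGET` because the branches whose simulations scored best are visited more and more often. Real systems replace the random rollouts and the hand-written `reward` with learned models, which this sketch does not attempt.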

In other words, the new species we are creating (AI models) is learning how to “think”.

System 2 Thinking: A New Paradigm for AI

Previous models typically answered immediately, without “thinking”. Adding “think step by step” to a prompt helped, as noted in the beginner-level tips for using AI in medical education, but it was a workaround rather than a solution.

PhD-level AI models now embody System 2 thinking, a concept from psychology referring to slower, deliberate, logical processing, similar to how humans solve complex problems. This native “thinking” capability means the model can better evaluate context, plan answers, and “reason” deeply. It’s no longer just parroting back text; it’s actually solving problems more like a human.

The Trade-Offs: Speed and Cost

There is, however, a price for this advanced reasoning power. These System 2 models are significantly slower and roughly six times more expensive to run than GPT-4o. It is therefore essential to use them strategically: they excel at high-stakes tasks requiring nuanced reasoning, but they’re overkill for simpler, everyday queries.

However, thanks to Moore's Law, the observation that computing power roughly doubles every two years while its cost falls (some even predict an even faster pace), this expense is likely to drop over time. Advanced models should therefore become faster, cheaper, and more accessible, eventually allowing broader use across many applications.
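The cost argument can be made concrete with a little arithmetic. As a rough sketch, assume (a simplification, not a forecast) that the cost of running a given model halves every two years, starting from the roughly 6x premium over GPT-4o mentioned above. The relative cost after t years is then cost(t) = 6 · 2^(−t/2), so the premium disappears when 6 · 2^(−t/2) = 1, i.e. t = 2 · log2(6) ≈ 5.2 years. The function names below are hypothetical.

```python
import math

# Assumptions (illustrative only): a ~6x cost premium today (figure from the
# text) and cost per unit of compute halving every 2 years (simplified Moore's Law).
PREMIUM = 6.0
HALVING_PERIOD_YEARS = 2.0


def years_until_parity(premium, halving_period):
    """Years until a cost that is `premium` times higher falls to today's baseline."""
    return halving_period * math.log2(premium)


def relative_cost(years, premium=PREMIUM, halving_period=HALVING_PERIOD_YEARS):
    """Cost relative to today's GPT-4o price after `years` of steady halving."""
    return premium * 0.5 ** (years / halving_period)


print(round(years_until_parity(PREMIUM, HALVING_PERIOD_YEARS), 1))
for y in (0, 2, 4, 6):
    print(y, round(relative_cost(y), 2))
```

Under these assumptions the premium goes 6.0 → 3.0 → 1.5 → 0.75 at years 0, 2, 4, and 6. Note that this compares against GPT-4o’s price today; if GPT-4o-class models get cheaper at the same rate, the ratio between the two stays the same, and only absolute costs fall.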

We will eventually reach a point where human reasoning is no longer needed for any task. That is where the era of artificial general intelligence (AGI) begins, and we should prepare for it because of its far-reaching consequences.

Yavuz Selim Kıyak, MD, PhD (aka MedEdFlamingo)

Follow the flamingo on X (Twitter) at @MedEdFlamingo for daily content.

Subscribe to the flamingo’s YouTube channel.

LinkedIn is another option to follow.

Who is the flamingo?
