How I Built an AI Feedback App (Free to Use) Without Knowing How to Code

As a medical educator, you need to read this to see what AWESOME things you can do

Dear Medical Educator,

What if I told you I built an AI feedback app that generates MCQs, gives instant feedback based on each student’s answer, and is completely free to use, all without knowing how to code?

Sounds unbelievable. But I did it.

It began with a simple frustration: I wanted our students to practice anytime, anywhere, without waiting for medical educators and without paying for expensive platforms.

In this post, I’ll explain how I managed to do this. This post will be longer than my previous ones, but it’s necessary because I’ll explain everything in detail.

Building Without Coding

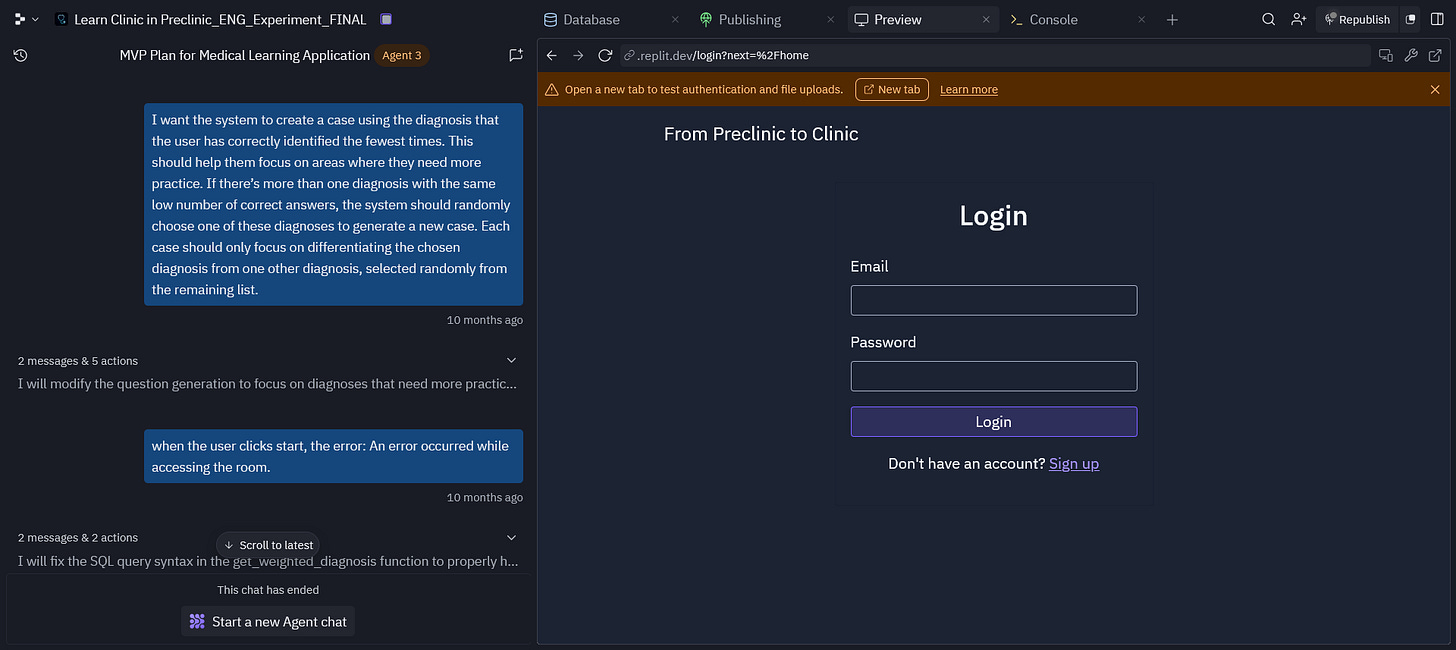

I signed up for Replit, basically a coding platform for people who don’t know how to code. In Replit, all you have to do is describe what you need in plain language.

I described what I needed to Replit’s AI agent. It generated the code, I tested whether it worked, I told it in plain language what didn’t work, and it corrected the code. It was an iterative back‑and‑forth process.

By testing, I don’t mean a complex process; I simply clicked the buttons in the app to see if it behaved as I intended.

Eventually, it produced the app I wanted. It took me hours to get there. In summary, the app offers:

Room creation (as many as needed) for students by admins.

Assigning 5 diseases to specific rooms, chosen from a list I created (which can be as long as I want).

Assigning students (who signed up for the app) to specific rooms.

Allowing students to practice the specific diseases I assigned.

Completion was achieved when students gave two correct responses for each disease (10 MCQs + feedback).
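For readers curious what the completion rule looks like in code, here is a minimal sketch. It is not the code Replit generated for me; the class name, method names, and disease names are hypothetical placeholders. Only the rule itself (two correct responses per assigned disease) comes from the app.

```python
from collections import Counter
from dataclasses import dataclass, field

REQUIRED_CORRECT = 2  # from the post: two correct responses per disease


@dataclass
class RoomProgress:
    """One student's progress in one room (hypothetical structure)."""
    diseases: list[str]
    correct: Counter = field(default_factory=Counter)

    def record_answer(self, disease: str, is_correct: bool) -> None:
        # Only diseases assigned to this room can be practiced.
        if disease not in self.diseases:
            raise ValueError(f"{disease!r} is not assigned to this room")
        if is_correct:
            self.correct[disease] += 1

    def remaining(self) -> list[str]:
        # Diseases that still need more correct responses.
        return [d for d in self.diseases if self.correct[d] < REQUIRED_CORRECT]

    def is_complete(self) -> bool:
        return not self.remaining()


# Placeholder disease names, for illustration only.
progress = RoomProgress(["disease_a", "disease_b"])
progress.record_answer("disease_a", True)
progress.record_answer("disease_a", True)
print(progress.remaining())
```

Wrong answers are simply not counted, so a student keeps getting questions on a disease until the second correct response arrives.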

Here is a screen recording from the app, shown from the student’s perspective in a test room.

At first, I used GPT-4o-mini. It is not open-source, but it is ready to use through an API. Think of an API as a pipeline from the service provider (in this case, OpenAI) that connects to your app so the app can run the AI model for whatever you need.
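To make the "pipeline" idea concrete, here is a rough sketch of what an app does when it talks to OpenAI's chat completions endpoint. This is not the code Replit wrote for me. The function names are my own, and it assumes the API key is stored in an environment variable called `OPENAI_API_KEY`.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Package a prompt as an HTTP request for the chat completions endpoint."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key identifies who pays
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_model(prompt: str) -> str:
    """Send the prompt over the internet and return the model's reply text."""
    request = build_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask_model("Write one MCQ about ...")` would send the text to OpenAI and bring back the answer. Every such call is billed, which is why the key matters.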

This way, I didn’t need to run an AI model locally or have any GPUs; OpenAI handled it for me. I had to start simple, since running open-source models locally is more complex. The downside was the cost: every request added up over time. We also depended on OpenAI and had to send data to them (even if it contained no personal information).

The app worked. Then I clicked deploy in Replit, which deployed my app to their servers and gave me a link to access it. Remember, I don’t know how to code, and yet I had just deployed a full web app. I shared the link with our students so they could sign up.

It was time to test its effectiveness, so I designed an experiment.

The app generated an MCQ for each of the 5 diseases. The student chose an option, and the app gave feedback based on the student’s answer. I tailored it for preclinical students who are new to clinical cases, prompting it to provide feedback at their level and to incorporate basic sciences like pathophysiology. Students practiced the same five diseases for five days as spaced repetition, spending less than 30 minutes per day and accessing the app from their own devices.
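As an illustration of what "prompting it" can look like, here is a small sketch that assembles a feedback prompt from one answered question. The wording is hypothetical and is not the prompt used in the study; the function name and its arguments are also my own. It only reflects the two requirements described above: feedback at a preclinical level, tied to basic sciences.

```python
def build_feedback_prompt(disease, stem, options, chosen, correct):
    """Assemble a feedback prompt for one answered MCQ (hypothetical wording)."""
    option_lines = "\n".join(f"{key}. {text}" for key, text in options.items())
    verdict = "correct" if chosen == correct else "incorrect"
    return (
        "You are tutoring a preclinical medical student who is new to clinical cases.\n"
        f"Topic: {disease}\n"
        f"Question: {stem}\n"
        f"Options:\n{option_lines}\n"
        f"The student chose {chosen}, which is {verdict}. The correct answer is {correct}.\n"
        "Explain why at the student's level, and link the reasoning to basic "
        "sciences such as pathophysiology."
    )


# Placeholder content, for illustration only.
print(build_feedback_prompt(
    disease="Disease X",
    stem="Placeholder case description",
    options={"A": "First option", "B": "Second option"},
    chosen="A",
    correct="B",
))
```

The text this function returns is what gets sent to the model; the model's reply is the feedback the student sees.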

Students liked it. We found that they learned from it, and the study was published in the Journal of Surgical Education.

This meant that I developed and deployed a web app that runs with an LLM and demonstrated its effectiveness! Without knowing how to code!

This was incredibly rewarding. It took a lot of effort, but without AI I could never have done it, because I don’t know how to code. I didn’t even know what an API was or how to get an API key. I asked ChatGPT how to get one, followed the steps, and Replit handled the rest.

If you would like to build and deploy your own app without writing any code, I recommend Replit. Here’s a sign-up link: https://replit.com/refer/yskiyak. If you’d prefer not to give me referral credit, you can use this alternative link.

Everything was working well, but I had one big challenge: scaling.

The Scaling Problem

OpenAI charges per use.

40 students × 5 days = about $0.50 in fees.

Replit's hosting + processing costs = $3-5.

Tiny money, until you multiply it by 400 students per year instead of 40 students for 5 days.
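Here is the back-of-the-envelope arithmetic as a small sketch. The pilot figures are the ones above. The big assumption, which is mine, is that both the API cost and the Replit cost grow in direct proportion to the number of student-days. That is reasonable for per-use API charges but only a guess for hosting.

```python
PILOT_STUDENTS = 40
PILOT_DAYS = 5
PILOT_API_COST = 0.50            # USD, OpenAI fees for the pilot
PILOT_HOSTING_COST = (3.0, 5.0)  # USD, low and high estimate for Replit


def project_cost(students: int, days: int) -> tuple[float, float]:
    """Return a (low, high) total cost in USD, assuming linear scaling."""
    scale = (students * days) / (PILOT_STUDENTS * PILOT_DAYS)
    api = PILOT_API_COST * scale
    low, high = (cost * scale for cost in PILOT_HOSTING_COST)
    return api + low, api + high


# One five-day block for each of 400 students.
print(project_cost(400, 5))
```

Under that assumption, `project_cost(400, 5)` returns `(35.0, 55.0)`, and the total keeps growing with every extra practice day you add per student.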

That’s when I realized: if this was going to reach all our students, it needed to be free. And the only way to make it free was to run it ourselves, on our own servers, with an open-source AI model.

I had already experimented with running some small LLMs on my computer, which has very modest GPUs, so I was familiar with the idea.

But I wasn’t aware of the difficulties that lay ahead.

Deploying on Our Servers (The Most Painful Part)

I downloaded my app’s code from Replit (it’s a small zip file) and tried to deploy it on our university’s server.

I don’t know Linux. I don’t know Docker. I don’t know deployment.

So here’s what happened:

First I asked ChatGPT how I could even do this, and it explained the steps.

I asked our IT department for the credentials to access the server. They just gave me the credentials and did not do anything more.

I learned there is something called a "terminal" that you can use to access the server.

I tried to run the app locally.

It broke.

I copied the error into ChatGPT.

ChatGPT gave some commands.

I pasted them.

Over and over. Many many many times.

It was really difficult for me. But in the end it worked, because I was determined to keep pushing until it did (I believe you can do the same if you’re sufficiently determined and have the courage to fail many times). I felt hopeless at times, but the app finally opened in my browser, running on our servers.

That tiny victory was euphoric. (It must be an easy task for technical people, but it was big for me.)

Now I had eliminated the hosting and processing fees I used to pay to Replit. But one problem remained: the AI service. We were still dependent on OpenAI’s API, the pipeline that gives us access to GPT models running on their servers, and they charge us based on our usage.

Running Open-Source Models

Step two: swap GPT-4o-mini for an open-source model.

Open-source models allow you to run LLMs locally on your own devices if the hardware is powerful enough (especially in terms of GPUs). No internet connection is required to run them. You simply download the model (which can be a few GBs or even hundreds, depending on its size) and run it for chat, feedback, or whatever you need.

In summary, open-source models don’t charge per use. You download them, run them locally, and all you need is GPU power.

Problem: our GPUs were too weak.

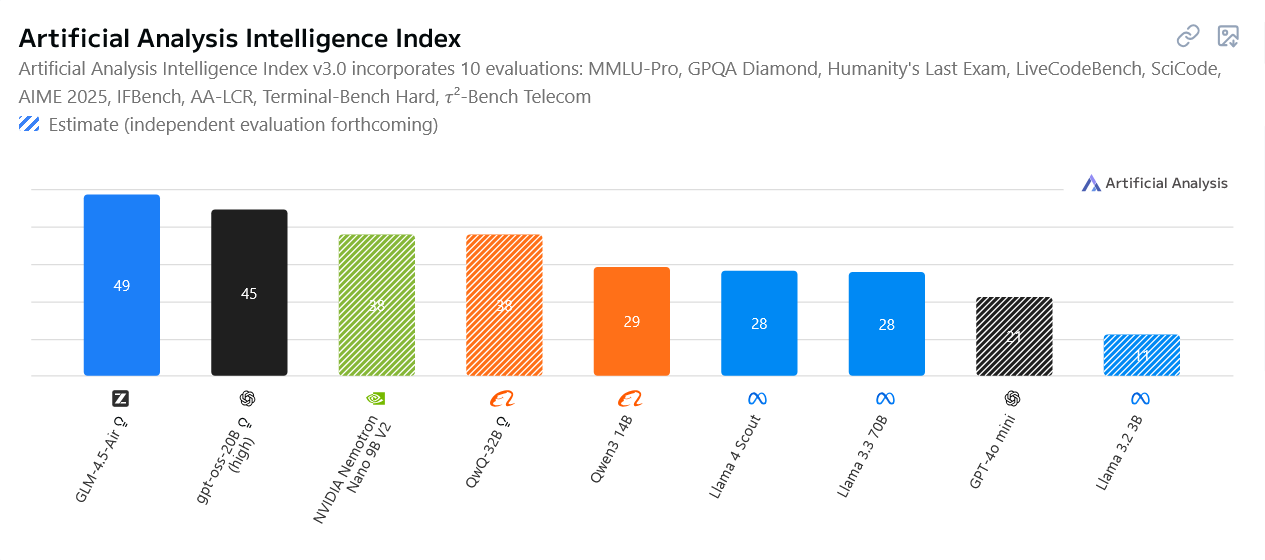

I managed to run Llama 3.2 3B Instruct, but the performance wasn’t good enough. The rate of inaccuracies (hallucinations) in the content was high, whereas GPT-4o-mini produced no hallucinations. This is not surprising, because Llama 3.2 3B Instruct is a really small (and therefore less capable) model compared to GPT-4o-mini, as shown in the performance comparison figure below.

To get a similar level of performance, I would need to run at least Llama 3.3 70B or an equivalent. But I am targeting gpt-oss-20b, a more powerful open-source model by OpenAI.

I asked ChatGPT what I would need to run it, and it suggested Apple’s Mac Studio with a 28‑core CPU, a 60‑core GPU, a 32‑core Neural Engine, 96 GB of unified memory, and 1 TB of SSD storage. It costs around $5,000.
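A rough way to see why memory is the bottleneck: the weights alone take roughly (number of parameters) × (bits per parameter) ÷ 8 bytes. The sketch below uses the nominal parameter counts from the model names (3, 20, and 70 billion), which are approximations. It ignores everything else the model needs while running, so treat the results as a lower bound.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_param / 8


# Nominal sizes taken from the model names; real parameter counts differ slightly.
for name, params in [("Llama 3.2 3B", 3), ("gpt-oss-20b", 20), ("Llama 3.3 70B", 70)]:
    print(
        f"{name}: ~{weight_memory_gb(params, 16):.0f} GB at 16-bit, "
        f"~{weight_memory_gb(params, 4):.1f} GB at 4-bit"
    )
```

A 70-billion-parameter model at 16 bits per parameter works out to about 140 GB of weights, which is why compressed (lower-bit) versions and large-memory machines come up so quickly in this conversation.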

So I hit pause.

The Next Step

Now I need to write a grant proposal for stronger GPUs. With them, I could run higher-end models locally; no OpenAI bill, no hosting fees, no middlemen.

If that works, every student in our program (400 per year, and beyond) could practice 24/7 for free.

Why I’m Sharing This

Because the myth is: “I can’t build tech. I don’t know how”.

The truth is: you don’t need to know. You just need to:

Use Replit ($180 per year).

Ask ChatGPT when you need more.

Follow instructions.

Copy, paste, test, repeat.

It’s sometimes messy. It’s slow. It’s frustrating. But it’s also possible.

I’m not special. I’m just stubborn. If you’ve been sitting on an idea because you “don’t know tech”, this is your reminder: you don’t need to.

Yavuz Selim Kıyak, MD, PhD (aka MedEdFlamingo)

Follow the flamingo on X (Twitter) at @MedEdFlamingo for daily content.


Related #MedEd reading:

Kıyak, Y. S., Emekli, E., İş Kara, T., Coşkun, Ö., & Budakoğlu, I. İ. (2025). AI Teaches Surgical Diagnostic Reasoning to Medical Students: Evidence from an Experiment Using a Fully Automated, Low-Cost Feedback System. Journal of Surgical Education, 82(10), 103639. https://authors.elsevier.com/a/1lYn9_hx1U%7EKXy

Çiçek, F. E., Ülker, M., Özer, M., & Kıyak, Y. S. (2025). ChatGPT versus expert feedback on clinical reasoning questions and their effect on learning: a randomized controlled trial. Postgraduate Medical Journal, 101(1195), 458–463. https://academic.oup.com/pmj/advance-article/doi/10.1093/postmj/qgae170/7917102

Kıyak, Y. S., & Emekli, E. (2024). ChatGPT prompts for generating multiple-choice questions in medical education and evidence on their validity: a literature review. Postgraduate Medical Journal, 100(1189), 858-865. https://academic.oup.com/pmj/advance-article/doi/10.1093/postmj/qgae065/7688383

Salam Dr. Kiyak,

This is such a timely and helpful post. I’m working on a similar project—though for profit, focused on the epilepsy board exam here in the U.S. I also ran into the scaling-cost problem when I tried using the OpenAI API to generate MCQs. I considered switching to a local LLM, but it never moved past the “thought about it” stage. Your research on the cost of building this made me think I will probably use my own chat interface for now (since my need is periodic, controlled by me), and hopefully, soon, I will be able to build a local LLM with comparable power.

And I completely agree with you. This is an exciting time for us non-coders. With the help of Replit and GPT-5, I was able to build a full-stack web app (front end, back end, database, subscription, payment) with only minimal coding knowledge.

Cheers,

Erafat

This is really inspiring! I’m a teacher educator and I’ve been exploring how to build a similar AI app, but I’m unsure where to start—or if there’s a simpler path than what I’m planning to do. Since I’m not very familiar with coding, deciding on the first step has been challenging. I’ve been following your work and would love to connect and learn from your experience.